Vibe Coding

Vibe Coding: The Rise of the AI Wizard, or the Dawn of Software Doomsday?

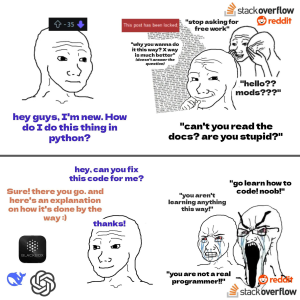

Introduction: Ah, vibe coding—a term fresh out of the AI hype oven, served sizzling hot by none other than Andrej Karpathy in early 2025. Imagine telling a large language model (LLM) to "whip me up a web app that tracks catnip prices and sends alerts when it’s on sale," and bam! Lines of code materialize like a magician pulling rabbits from a hat. It’s programming reimagined as a séance, where developers whisper prompts to an AI and hope it conjures functional software from the digital ether.

It’s the future, baby! Or at least, that’s what tech evangelists are selling. But let’s not kid ourselves: vibe coding is to software development what paint-by-numbers is to fine art. Sure, it can slap colors on the canvas, but don’t expect it to paint a Van Gogh. The reality is a bit more... cursed.

The AI Wizard Fantasy: The promise of vibe coding sounds too good to be true: anyone can code! No more slaving away over syntax errors or arcane compiler tantrums—just vibe, describe, and let the AI handle the rest. Amateur programmers and tech bros alike are lining up for a piece of the magic. They dream of writing apps without needing to understand pesky details like algorithms, data structures, or cybersecurity. After all, who cares about SQL injection when your LLM-generated app can serve cocktails and NFTs on the blockchain, right?

The problem is, that’s the kind of thinking that’ll land you on the front page of "Hackers Steal 10 Million User Accounts Due to Vibe Coding Blunder" faster than you can say “credential stuffing.”

From Code to Chaos: The Security Nightmare of Vibe Coding

Vibe coding is an express ticket to introducing security vulnerabilities into production software, with no stops and no refunds. When the human writing the prompt has no real grasp of software engineering principles, the AI-generated code ends up like a sandcastle: it looks fine until a wave of malicious requests crashes in.

Here's the rub: LLMs are not cybersecurity experts. They’re statistical parrots trained to autocomplete based on mountains of existing code. This means they might cough up outdated, insecure, or plain wrong practices, like calling eval() on untrusted input or using weak encryption. And unless the prompter knows how to vet and refine that code (hint: most won’t), they’ll happily deploy an app riddled with security holes.
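To make the eval() point concrete, here is a minimal Python sketch of the usual fix when code only needs to turn a string into data: parse it as JSON instead of executing it. The `parse_data` helper, the sample payload, and the 10,000-character cap are illustrative assumptions, not a prescribed API.

```python
import json

# Hypothetical attacker-controlled text; eval() would import os and run it
untrusted = "__import__('os').getcwd()"

# The risky pattern for "parse this string into a dict":
# config = eval(untrusted)

def parse_data(text: str, max_len: int = 10_000):
    """Parse JSON from untrusted text; nothing in the input is ever executed."""
    if len(text) > max_len:  # crude size cap; the limit is an arbitrary assumption
        raise ValueError("input too large")
    try:
        return json.loads(text)
    except (json.JSONDecodeError, RecursionError) as exc:
        # Malformed or absurdly nested input is rejected instead of run
        raise ValueError(f"rejected input: {exc}") from None

print(parse_data('{"user": "alice", "roles": ["admin"]}'))  # {'user': 'alice', 'roles': ['admin']}
```

JSON is a deliberate swap here: it only describes data, so there is no code path to exploit. The standard library's `ast.literal_eval` also refuses to run code, but recent versions of its documentation advise against calling it on untrusted data because crafted input can exhaust memory or the C stack.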

The AI might output something like:

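```python
# Quick and dirty user input example (deliberately insecure, for illustration)
import sqlite3

db = sqlite3.connect(":memory:")  # stand-in user store with a plaintext password
db.executescript("CREATE TABLE users (username TEXT, password TEXT); INSERT INTO users VALUES ('alice', 'hunter2');")

def login(username, password):
    # Input is pasted straight into the SQL string: SQL injection, and no limit on attempts
    query = f"SELECT 1 FROM users WHERE username = '{username}' AND password = '{password}'"
    return db.execute(query).fetchone() is not None

username = input("Enter username: ")
password = input("Enter password: ")  # echoed to the terminal as you type
print("Welcome!" if login(username, password) else "Access denied")
```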

Cute! Until you realize it has zero protection against SQL injection or brute-force attacks. But hey, it vibes, right?
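For contrast, here is a minimal sketch of a less naive `login(username, password)` in Python, assuming a SQLite user table. The schema, the `register` helper, the iteration count, and the five-attempt lockout are illustrative assumptions, not a vetted design. It uses parameterized queries against SQL injection, salted PBKDF2 hashes instead of stored passwords, a constant-time comparison, and a crude per-account lockout against brute force.

```python
import hashlib
import hmac
import os
import sqlite3

MAX_ATTEMPTS = 5  # hypothetical lockout threshold
ITERATIONS = 600_000  # PBKDF2 work factor; an assumption, tune for your hardware

db = sqlite3.connect(":memory:")  # stand-in user store
db.execute(
    "CREATE TABLE users (username TEXT PRIMARY KEY, salt BLOB, pw_hash BLOB, "
    "failures INTEGER NOT NULL DEFAULT 0)"
)

def hash_password(password: str, salt: bytes) -> bytes:
    # Salted, deliberately slow hash: a leaked table doesn't reveal passwords directly
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)

def register(username: str, password: str) -> None:
    salt = os.urandom(16)  # fresh random salt per user
    db.execute(
        "INSERT INTO users (username, salt, pw_hash) VALUES (?, ?, ?)",
        (username, salt, hash_password(password, salt)),
    )

def login(username: str, password: str) -> bool:
    # "?" placeholders make sqlite3 bind values as data, never as SQL text
    row = db.execute(
        "SELECT salt, pw_hash, failures FROM users WHERE username = ?", (username,)
    ).fetchone()
    if row is None:
        return False
    salt, stored, failures = row
    if failures >= MAX_ATTEMPTS:
        return False  # account locked: crude brute-force protection
    ok = hmac.compare_digest(hash_password(password, salt), stored)
    # Reset the counter on success, bump it on failure
    db.execute(
        "UPDATE users SET failures = ? WHERE username = ?",
        (0 if ok else failures + 1, username),
    )
    return ok
```

After `register("alice", "correct horse battery staple")`, a call like `login("alice", "' OR '1'='1")` simply returns False: the quote trick is now just a wrong password. A per-account lockout has its own cost (an attacker can lock out real users), so real systems usually pair it with per-IP rate limiting, read passwords with `getpass.getpass()` so they aren't echoed, and lean on a maintained auth library rather than hand-rolled code.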

Not a Real Job: The Vibe Coder Dilemma

Here’s the uncomfortable truth: vibe coding isn’t a job; it’s a hobby. It’s like calling yourself a chef because you can microwave instant noodles. There’s a reason software engineers study for years: writing reliable, secure, and scalable software is hard. If vibe coders are a replacement for software developers, then karaoke singers are about to headline Coachella.

And what happens when these vibe coders are dropped into a real job? They’ll face codebases spanning hundreds of thousands of lines, DevOps pipelines, test frameworks, and requirements that won’t just vibe away. Debugging, refactoring, and understanding complex logic demand skills no AI can teach on the fly.

The illusion of productivity crumbles fast when the vibe coder’s LLM-generated app collapses under real-world conditions. If vibe coding were an RPG, it’d be a level 1 wizard trying to solo a dragon—lots of flashy spells but very dead by turn two.

A Recipe for Technical Debt: The industry is on a collision course with the worst kind of technical debt, the kind that won’t reveal itself until it’s too late. Vibe coding’s reckless shortcuts will leave software landmines waiting to explode in production environments. Bugs, vulnerabilities, and broken features will pile up as businesses realize they’ve been duped by promises of "code without coders".

Once that happens, it’s back to the professionals to clean up the mess. That’s assuming they can even decipher the spaghetti code churned out by a hallucinating LLM on a vibes-only diet.

Conclusion: Vibe coding isn’t the future of software development—it’s a novelty at best and a liability at worst. While the idea of democratizing code is commendable, pretending AI can replace the deep, analytical work of a skilled programmer is a dangerous delusion. LLMs can be powerful tools, but they’re not replacements for expertise.

So, to all the vibe coders out there: keep vibing, but don’t quit your day job. Or better yet, learn to code the old-fashioned way. Because when production apps go down and security breaches make headlines, the vibes won’t save you.

After all, magic is only impressive until the curtain falls.

Vulnerability-as-a-Service

Vibe Coding: Vulnerability-as-a-Service (VaaS) for the Modern Dev

In the age of move-fast-break-everything development, “Vibe Coding” has unofficially become the gold standard of software deployment—and by gold, we mean fool’s gold wrapped in broken promises and CVEs. It’s the art of writing code with vibes, not structure; pushing to production before the first test even runs; and relying on AI-generated snippets that look functional but carry the subtle elegance of a time bomb.

At its core, Vibe Coding is Vulnerability-as-a-Service: a gift that keeps on giving to every bug hunter and black hat skimming Shodan for open ports. Security isn't an afterthought; it's not a thought at all. Developers lean into AI tools, unaware of (or indifferent to) the fact that they’re copy-pasting insecure legacy code mixed with modern syntax, like wiring your smart home with damp spaghetti.

But this isn’t a tragedy. It’s a business model. Bug bounty platforms thrive, infosec consultants get billable hours, and companies proudly claim “iterative development” while quietly rebuilding their backend after the fifth breach. Meanwhile, the devs keep vibing—because if it runs, it ships. If it breaks, it’s someone else’s job.

Vibe Coding is not just poor practice. It’s an entire economy built on unverified inputs, infinite trust in unchecked dependencies, and the ever-growing confidence that someone, somewhere, will secure it later. Probably. Maybe.
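On the "unchecked dependencies" point, one common mitigation is to pin every third-party artifact to a known hash and refuse anything that doesn't match. A minimal Python sketch, assuming a hypothetical `pinned` mapping of file names to SHA-256 hex digests that you maintain yourself:

```python
import hashlib
from pathlib import Path

def sha256_of(path: Path) -> str:
    """Hash the file in 64 KiB chunks so large artifacts needn't fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()

def verify_artifact(path: Path, pinned: dict[str, str]) -> None:
    """Raise unless the file is pinned and its contents match the pinned hash."""
    expected = pinned.get(path.name)
    if expected is None:
        raise ValueError(f"{path.name} is not pinned; refusing to trust it")
    actual = sha256_of(path)
    if actual != expected:
        raise ValueError(f"hash mismatch for {path.name}: expected {expected}, got {actual}")
```

For Python packages, pip offers the same check natively in hash-checking mode: add `--hash=sha256:<digest>` entries to a requirements file and install with `pip install --require-hashes -r requirements.txt`.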